Because there is little justification, besides learning, for spending the time upgrading SharpRomans to .NET Core.

But learning is fun and quite enough for me.

Curious?

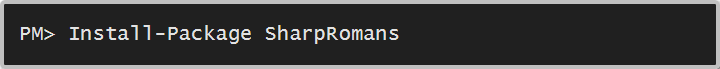

If you have ever needed to work with Roman numerals and you enjoy the type system, you can go to NuGet and get it:

The package now targets .NET Standard 1.1 (before, it was a portable library), so people on challenged runtimes (Silverlight people in all their decadent flavors) won’t be able to get it (I don’t think they complain anymore).

That means the IConvertible features are out the door in order to reach as many platforms as possible. Besides, I do not think they ever made much sense anyway.

Behind the scenes

For such a small change (and a breaking one, no less) there was a ton of soul-crushing work to be done, mainly having to do with unsupported tooling.

Native Taste

Whereas in other OSS projects of mine (NMoneys and Testing.Commons) I took a dual-project approach to keep the features available to users targeting the older frameworks without raising the minimum framework version for little reason, for SharpRomans (harsh as it sounds) I felt no remorse about leaving a very few users behind. So, instead, I maintain a single project targeting the netstandard world.

That means leaving behind developers (if any) who have not jumped on the wagon of Visual Studio 2017 or the .NET Core SDK world. But again, zero remorse for the even smaller user base.

Going native has a nice side effect: all the work in the build script is performed via dotnet commands and, let me tell you, that cut the size of the build script from 98 lines to 57. That is a huge gain for such a simple script (considering that the newer one is more fully featured than the older).

Vintage scent

Since I went all “netcore native” and NUnit still did not support the dotnet test command (it seems it does now), I had to look away from NUnit towards the only other supported testing framework (I refuse to use MSTest, call me a bigot): xUnit.

A lot of people like the simplicity of the framework. For me, removing a handful of attributes while having an awful, terribly dated default assertion library was not a step forward at all.

I know that one can run whichever assertion library one feels like (Shouldly, Fluent Assertions,…). Hell, I could have even used NUnit’s sweet constraint model for the assertions only. But I did not feel like bringing another dependency.

What I liked the most, however, was not its simplicity, but the somewhat complex collection fixtures feature, which allowed me to run initialization code shared across fixtures before any test was run.

That, and the extensible trait model, which helped not only to categorize tests but also to enable some interesting reflection scenarios for documentation generation.
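For readers who have not met these two xUnit features, here is a minimal sketch of how they fit together. It is not the code from SharpRomans: the names `SharedSetupFixture`, `SharedSetupCollection`, `ParsingTests` and the "Category"/"Parsing" trait are made up for illustration. Only `ICollectionFixture<T>`, `[CollectionDefinition]`, `[Collection]`, `[Fact]` and `[Trait]` come from xUnit itself.

```csharp
using System;
using Xunit;

// Hypothetical fixture: created once, before the first test of the collection runs,
// and disposed after the last one finishes.
public class SharedSetupFixture : IDisposable
{
    public bool IsInitialized { get; private set; }

    public SharedSetupFixture()
    {
        // Expensive or global initialization would go here.
        IsInitialized = true;
    }

    public void Dispose()
    {
        // Clean-up shared by every test class in the collection.
        IsInitialized = false;
    }
}

// Marker class: it has no code, it only ties the fixture to a collection name.
[CollectionDefinition("Shared setup")]
public class SharedSetupCollection : ICollectionFixture<SharedSetupFixture> { }

// Any test class that joins the collection receives the same fixture instance
// through its constructor.
[Collection("Shared setup")]
public class ParsingTests
{
    private readonly SharedSetupFixture _fixture;

    public ParsingTests(SharedSetupFixture fixture)
    {
        _fixture = fixture;
    }

    [Fact]
    [Trait("Category", "Parsing")] // plain name/value trait, usable for filtering
    public void Fixture_is_ready_before_the_test_runs()
    {
        Assert.True(_fixture.IsInitialized);
    }
}
```

Traits are plain name/value pairs attached to the test method, so they can be read back via reflection as well as used for filtering test runs.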

BDD refresh

I have written about BDD before. Back then I mentioned BDDfy, which happens to support .NET Core, unlike the hardly supported StoryQ.

It turns out that migrating all the scenarios from one framework to the other was not an easy task at all. It required a crapload of boring text search and replace. It now dawns on me that I might have made it more interesting by using Roslyn, but I do not think it would have saved me any time (though it might have spared me the pain).

I am cool enough with the framework and thrilled about the out-of-the-box markdown support and how easy it is to extend. I took advantage of that when I created a processor that, instead of writing one big markdown file with all the stories, writes one file per story, so that I can easily update the specification that lives in the project’s Wiki.

Two things I would change (or even better, contribute to the project):

- fix the documentation site. It is a pity that a well-documented project no longer looks like one because of broken links and missing images.

- implement a better way to inject step arguments into the description of the step. Right now one has to rewrite the step narrative (injecting the parameter placeholders). This works fine, except that one has to repeat oneself: name the method and then rewrite the narrative. StoryQ took a better approach by substituting the arguments for tokens in the method name.
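To make the second wish concrete, here is a rough sketch of what token substitution in a method name could look like. It is neither StoryQ's nor BDDfy's actual implementation: `StepNarrative.From` is a hypothetical helper, and treating each underscore as the placeholder for the next argument is an assumption made for the example.

```csharp
using System;
using System.Text;

public static class StepNarrative
{
    // Hypothetical helper: builds a readable step description from a
    // PascalCase method name, replacing each '_' with the next argument.
    public static string From(string methodName, params object[] args)
    {
        var text = new StringBuilder();
        int next = 0;

        foreach (char c in methodName)
        {
            if (c == '_')
            {
                // Underscore: emit the next argument, or keep the token if none is left.
                if (text.Length > 0) text.Append(' ');
                text.Append(next < args.Length ? Convert.ToString(args[next++]) : "_");
            }
            else if (char.IsUpper(c) && text.Length > 0)
            {
                // A new word starts at every upper-case letter after the first character.
                text.Append(' ').Append(char.ToLowerInvariant(c));
            }
            else
            {
                text.Append(c);
            }
        }

        return text.ToString();
    }
}

// Usage:
//   StepNarrative.From("TheRoman_IsParsed", "XIV")
//   returns "The roman XIV is parsed"
```

With something along these lines the method name is the only place where the narrative is written, so renaming the method renames the step.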

Another way of scripting

I recently learnt about Invoke-Build as an alternative to the venerable PSake. So I thought I would give it a shot, since I was changing the majority of the build script anyway.

And to be perfectly honest, I did not even use the feature I thought I was going to like the most: detection of the correct version of MSBuild (because I am using dotnet instead and expect it to be in the path). So, besides some nifty composition of tasks, I do not think I am getting anything from it that I would not get from PSake, given the complexity of my scripts.

Was it worth it?

It surely was. It was very educational for .NET Core concepts such as multi-targeting, and for learning about xUnit.

But to be honest, I would not like to go through the hell of migrating all BDD scenarios again.